Snowflake Data Cloud is built to power applications with no restrictions on performance, concurrency, or scale. Fast-growing software companies trust Snowflake to handle the infrastructure complexity, so you can focus on building your app.

What is the Snowflake Data Cloud, and how does it work?

Snowflake runs on the cloud infrastructure of Amazon Web Services, Microsoft Azure, and Google Cloud. There is no hardware or software to select, install, configure, or administer. As a result, it is a good fit for companies that don’t want to devote resources to in-house server setup, maintenance, and support.

What sets Snowflake apart is its architecture and its data sharing capabilities. The architecture lets storage and compute scale independently, so customers can use and pay for each separately. The sharing capabilities let organizations share governed, secured data in real time.

Snowflake advantages for your company

Snowflake is a cloud-native data warehouse that addresses many of the difficulties that plague traditional hardware-based data warehouses, including limited scalability, data transformation problems, and delays or failures caused by heavy query volumes. Here are five ways that Snowflake can help your company.

Performance and speed

We use Snowflake to manage around 500 TB of data. Thanks to the cloud’s elasticity, if you need to load data faster or run a high volume of queries, you can scale up your virtual warehouse to use more compute resources. You can then scale the virtual warehouse back down, and you are charged only for the time you used it.
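As a rough illustration of scaling a warehouse up and back down, the sketch below builds the `ALTER WAREHOUSE` statements you might run around a heavy load. The warehouse name `load_wh` and the helper `resize_statement` are hypothetical, and the statements are only printed here; in practice they would be sent through whichever Snowflake client you use.

```python
# A subset of Snowflake warehouse sizes, smallest to largest (larger sizes also exist).
WAREHOUSE_SIZES = ["XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"]


def resize_statement(warehouse: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement that changes the warehouse size."""
    size = size.upper()
    if size not in WAREHOUSE_SIZES:
        raise ValueError(f"unknown warehouse size: {size}")
    return f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{size}'"


# Hypothetical warehouse name: scale up before a heavy load, then back down.
scale_up = resize_statement("load_wh", "large")
scale_down = resize_statement("load_wh", "xsmall")
print(scale_up)
print(scale_down)
```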

Storage and support for structured and semi-structured data

For analysis, you can combine structured and semi-structured data and load it into a cloud database without first converting or transforming it into a fixed relational schema. Snowflake automatically optimizes how the data is stored and queried.
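To make the idea of querying semi-structured data without a fixed schema more concrete, here is a small sketch that builds Snowflake path expressions of the form `column:key.subkey::type`. The table `events`, the VARIANT column `raw`, the JSON keys, and the helper `variant_path` are all assumptions made for illustration.

```python
def variant_path(column: str, keys: list[str], cast: str) -> str:
    """Build a path expression such as raw:customer.city::string."""
    if not keys:
        raise ValueError("at least one key is required")
    return f"{column}:{'.'.join(keys)}::{cast}"


# Hypothetical table `events` with a VARIANT column `raw` holding JSON documents.
query = (
    "SELECT "
    + variant_path("raw", ["customer", "city"], "string") + " AS city, "
    + variant_path("raw", ["purchase", "total"], "number(10,2)") + " AS total "
    + "FROM events"
)
print(query)
```

The JSON is loaded as-is, and the structure is applied only when the query reads it.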

Seamless data sharing

Snowflake’s architecture allows its users to share data with one another. It also lets enterprises share data with anyone, whether or not they are a Snowflake customer, through reader accounts created directly from the user interface. With a reader account, the provider creates and manages a Snowflake account on the consumer’s behalf.

Accessibility and concurrency

In a traditional data warehouse with many users or use cases, concurrency issues can arise when too many queries compete for the same resources.

Snowflake’s multi-cluster architecture solves concurrency problems by ensuring that queries from one virtual warehouse never affect queries from another. Each virtual warehouse can scale up or down as needed. Data analysts and data scientists can get what they need right away, without waiting for other loading and processing jobs to finish.

Security and availability

Snowflake is distributed across the availability zones of the cloud platform it runs on (AWS, Azure, or Google Cloud) and is designed to operate continuously, tolerating component and network failures with minimal impact on users.

Features

Separation of compute and storage

Snowflake separates compute from storage so that each can scale independently and efficiently.

Auto-scaling

Snowflake scales compute resources up and down automatically for near-infinite concurrency, without affecting performance or requiring data to be redistributed.
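A minimal sketch of how auto-scaling might be configured: the helper below builds a `CREATE WAREHOUSE` statement with minimum and maximum cluster counts plus auto-suspend and auto-resume. The warehouse name `bi_wh`, the chosen values, and the helper itself are assumptions; multi-cluster warehouses also depend on the Snowflake edition in use.

```python
def multi_cluster_warehouse(name: str, size: str, min_clusters: int,
                            max_clusters: int, auto_suspend_s: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement for an auto-scaling warehouse."""
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    options = [
        f"WAREHOUSE_SIZE = '{size}'",
        f"MIN_CLUSTER_COUNT = {min_clusters}",   # clusters kept running under light load
        f"MAX_CLUSTER_COUNT = {max_clusters}",   # upper bound when queries start to queue
        "SCALING_POLICY = 'STANDARD'",
        f"AUTO_SUSPEND = {auto_suspend_s}",      # seconds of inactivity before suspending
        "AUTO_RESUME = TRUE",
    ]
    return f"CREATE WAREHOUSE IF NOT EXISTS {name}\n  " + "\n  ".join(options)


# Hypothetical BI warehouse that may grow from 1 to 4 clusters.
ddl = multi_cluster_warehouse("bi_wh", "MEDIUM", 1, 4)
print(ddl)
```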

Pay only for what you use

With per-second compute pricing, you can align expenses with your product margins and avoid paying for unused resources.
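The arithmetic behind per-second pricing can be sketched as follows. The credits-per-hour table reflects standard warehouse sizes and the 60-second minimum charged each time a warehouse starts; the price per credit is purely an assumed figure, since it depends on edition, region, and contract.

```python
# Credits consumed per hour by standard warehouse size.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}
MIN_BILLED_SECONDS = 60        # minimum charge each time a warehouse starts or resumes
PRICE_PER_CREDIT_USD = 3.00    # assumption for illustration only


def credits_used(size: str, run_seconds: float) -> float:
    """Credits for one continuous run, billed per second with a 60 s minimum."""
    billed = max(run_seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed / 3600


def cost_usd(size: str, run_seconds: float) -> float:
    """Estimated cost of the run at the assumed price per credit."""
    return credits_used(size, run_seconds) * PRICE_PER_CREDIT_USD


# A MEDIUM warehouse running for 15 minutes, and an XSMALL one running for 10 seconds.
print(f"{credits_used('MEDIUM', 900):.2f} credits, ${cost_usd('MEDIUM', 900):.2f}")
print(f"{credits_used('XSMALL', 10):.4f} credits (billed as 60 s)")
```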

Native support for semi-structured data

Ingest and query JSON, Parquet, Avro, ORC, and XML right away, without having to define schemas in advance.

Cross-cloud global replication

With global replication for high availability, data durability, and disaster recovery, you may deploy on any cloud and in any region.

Zero-copy cloning

Create sandboxes and pilots quickly with live data, without the burden of copying and transferring it.
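As an illustration, a sandbox could be created with a `CLONE` statement like the ones built below. The database and table names and the helper `clone_statement` are hypothetical.

```python
CLONEABLE = {"DATABASE", "SCHEMA", "TABLE"}  # object types handled by this sketch


def clone_statement(object_type: str, target: str, source: str) -> str:
    """Build a CREATE ... CLONE statement for a database, schema, or table."""
    object_type = object_type.upper()
    if object_type not in CLONEABLE:
        raise ValueError(f"unsupported object type: {object_type}")
    return f"CREATE {object_type} {target} CLONE {source}"


# Hypothetical names: a full sandbox database and a single cloned table.
sandbox_db = clone_statement("database", "sandbox_db", "prod_db")
sandbox_table = clone_statement("table", "dev_db.public.orders", "prod_db.public.orders")
print(sandbox_db)
print(sandbox_table)
```

The clone initially shares the source’s underlying storage, so additional storage is used only as the two copies diverge.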

Time Travel

For up to 90 days, you can easily access historical data that has been changed or deleted.
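A small sketch of what Time Travel queries can look like: the helper builds a `SELECT ... AT(OFFSET => ...)` statement for a point in the past, and a second helper builds an `UNDROP` statement. The table name is hypothetical, and the retention actually available depends on the account’s edition and retention settings.

```python
from datetime import timedelta

MAX_RETENTION = timedelta(days=90)  # upper bound mentioned above; actual retention may be shorter


def time_travel_query(table: str, ago: timedelta) -> str:
    """Build a query that reads the table as it was `ago` in the past."""
    if not timedelta(0) < ago <= MAX_RETENTION:
        raise ValueError("offset must be positive and within the retention window")
    seconds = int(ago.total_seconds())
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds})"


def undrop_statement(table: str) -> str:
    """Build a statement that restores a dropped table."""
    return f"UNDROP TABLE {table}"


# Hypothetical table: read it as of one hour ago, or restore it after a drop.
print(time_travel_query("orders", timedelta(hours=1)))
print(undrop_statement("orders"))
```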

Drivers and connectors

ODBC, JDBC, Node.js, Python, .NET, Go, Spark, and Kafka are just a few of the native clients and connectors that can be readily integrated. PHP and Ruby drivers will be available soon.

ANSI SQL

Use SQL to manage all of your data, whether structured or semi-structured, with joins across data types, databases, and external tables.

User-defined functions

Extend Snowflake with in-engine JavaScript functions for custom calculations.

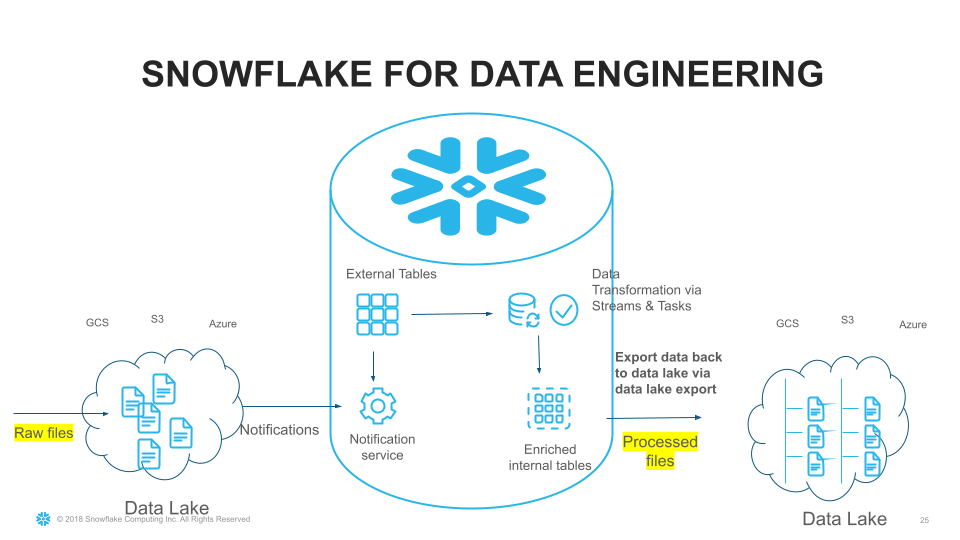

Continuous data pipelines

With Snowpipe and third-party data solutions, automate the steps for transforming and optimizing continuous data loads.
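As a hedged example of a continuous load, the sketch below builds a `CREATE PIPE` statement that wraps a `COPY INTO` command. The pipe, table, and stage names and the helper are hypothetical, and auto-ingest additionally assumes an external stage with cloud event notifications already configured.

```python
def pipe_statement(pipe: str, table: str, stage: str, file_type: str = "JSON") -> str:
    """Build a CREATE PIPE statement that loads new files from a stage into a table."""
    return (
        f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS\n"
        f"COPY INTO {table} FROM @{stage}\n"
        f"FILE_FORMAT = (TYPE = '{file_type}')"
    )


# Hypothetical names for a pipe that keeps the `events` table up to date.
pipe_ddl = pipe_statement("events_pipe", "events", "events_stage")
print(pipe_ddl)
```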

Secure data sharing

To establish a single source of truth across your ecosystem, share live data with your partners quickly and securely, without copying or moving it.
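The sequence of statements a provider might run to share a table can be sketched like this. The share, database, table, and consumer account names are all made up for illustration, as is the helper `share_statements`.

```python
def share_statements(share: str, database: str, schema: str,
                     table: str, consumer_account: str) -> list[str]:
    """Build the statements that expose one table to a consumer account via a share."""
    return [
        f"CREATE SHARE {share}",
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share}",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share}",
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share}",
        f"ALTER SHARE {share} ADD ACCOUNTS = {consumer_account}",
    ]


# Hypothetical provider objects and consumer account identifier.
statements = share_statements("sales_share", "sales_db", "public",
                              "orders", "partner_org.partner_account")
for stmt in statements:
    print(stmt + ";")
```

No data is copied in this flow; the consumer queries the provider’s live table through the share.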

SQL API

Make SQL calls to Snowflake programmatically, without the need for client drivers or additional API management infrastructure.
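A minimal sketch of what a SQL API call might look like using only the Python standard library. It builds, but does not send, a POST request to the statements endpoint. The account identifier, token placeholder, and warehouse name are assumptions, and the example presumes key-pair (JWT) authentication has been set up separately.

```python
import json
import urllib.request


def build_statement_request(account: str, token: str, sql: str,
                            warehouse: str) -> urllib.request.Request:
    """Build a POST request that submits one SQL statement to the SQL API."""
    url = f"https://{account}.snowflakecomputing.com/api/v2/statements"
    body = {"statement": sql, "timeout": 60, "warehouse": warehouse}
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
        "Accept": "application/json",
        "X-Snowflake-Authorization-Token-Type": "KEYPAIR_JWT",
    }
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


# Hypothetical account identifier, token placeholder, and warehouse name.
req = build_statement_request("myorg-myaccount", "<jwt>", "SELECT CURRENT_VERSION()", "COMPUTE_WH")
print(req.get_method(), req.full_url)
# Sending the request would be urllib.request.urlopen(req); it is not sent here.
```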

External tables

Query data in cloud object stores for extra insights, without ingesting it.

Encryption and access controls

Role-based access controls (RBAC) and data encryption at rest and in transit can help you protect your customers’ data.
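Role-based access control comes down to granting privileges to roles and roles to users. The sketch below builds one possible set of grants for a read-only analyst role; the role, warehouse, database, schema, and user names are hypothetical.

```python
def read_only_role_grants(role: str, warehouse: str, database: str,
                          schema: str, user: str) -> list[str]:
    """Build grants giving a role read-only access to one schema, then assign it to a user."""
    qualified_schema = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {qualified_schema} TO ROLE {role}",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {qualified_schema} TO ROLE {role}",
        f"GRANT ROLE {role} TO USER {user}",
    ]


# Hypothetical analyst role limited to reading sales_db.public.
grants = read_only_role_grants("analyst", "bi_wh", "sales_db", "public", "jane")
for stmt in grants:
    print(stmt + ";")
```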

The following are some of the tasks we have carried out with Snowflake:

- Snowflake is used to manage around 500 TB of data.

- We’ve set up data ingestion pipelines from a variety of sources, including AWS S3, SFTP, Dropbox, and email.

- ETL/ELT pipelines were implemented, processing gigabytes of data every day. In the ETL step, Databricks is used for non-SQL processing.

- Architected and designed schemas, tables, and views to take advantage of Snowflake-specific capabilities such as clustering keys, micro-partitions, and cloning, among other things.

- Snowflake can handle semi-structured data in JSON, Parquet, and XML formats.

- Snowflake performance tuning was done extensively using data clustering, reducing local and remote disk spilling, leveraging materialized views, warehouse scaling, and other techniques.

- Developed aggregation jobs that used UDFs, stored procedures, window functions, and aggregate functions to aggregate terabytes of time-series datasets.

- We connect to Snowflake using BI tools like Tableau, Power BI, MS Excel, and others to explore and analyze data.

- To automate batch jobs, we now use SQL.

- Jobs were implemented to track Snowflake credit usage, long-running queries, warehouse utilization, and other metrics.

- Managed user and group provisioning, object access control, data security, data replication, and failover/failback in Snowflake.
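The monitoring jobs mentioned in the list above could be sketched along these lines. The two queries read Snowflake’s `ACCOUNT_USAGE` views for credit usage and query history, and a small helper flags long-running queries from the returned rows. The time windows, the five-minute threshold, and the sample rows are assumptions chosen for illustration; they are not results from a real account.

```python
# Credits consumed per warehouse over the last 7 days.
CREDITS_BY_WAREHOUSE_SQL = """
SELECT warehouse_name, SUM(credits_used) AS credits
FROM snowflake.account_usage.warehouse_metering_history
WHERE start_time >= DATEADD(day, -7, CURRENT_TIMESTAMP())
GROUP BY warehouse_name
ORDER BY credits DESC
"""

# Queries from the last day; total_elapsed_time is reported in milliseconds.
RECENT_QUERIES_SQL = """
SELECT query_id, warehouse_name, total_elapsed_time
FROM snowflake.account_usage.query_history
WHERE start_time >= DATEADD(day, -1, CURRENT_TIMESTAMP())
"""


def long_running(rows, threshold_s: float = 300):
    """Return rows (query_id, warehouse_name, elapsed_ms) slower than the threshold."""
    return [row for row in rows if row[2] / 1000 > threshold_s]


# Made-up sample rows standing in for the result of RECENT_QUERIES_SQL.
sample_rows = [
    ("q1", "bi_wh", 12_000),      # 12 seconds
    ("q2", "load_wh", 640_000),   # about 10.7 minutes
    ("q3", "bi_wh", 301_000),     # just over 5 minutes
]
slow = long_running(sample_rows)
print([row[0] for row in slow])
```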